Elections in the US are just around the corner, and Microsoft has introduced technology of its own to curb misinformation during this crucial period. The company has announced a new deepfake detection tool called Microsoft Video Authenticator, which is meant to help spot AI-generated synthetic images and videos on the internet.

For the uninitiated, deepfake technology is used to swap people’s faces in videos and make them appear to say things they never said. Although the technology has its positive side, deepfakes are infamous as a wellspring of fake porn videos featuring celebrities and face-swapped videos of politicians saying controversial things.

Moreover, deepfake tech is getting better as we speak, and the results are getting harder to detect, even for experts. That’s where the idea of pitting AI against AI to spot deepfakes comes in.

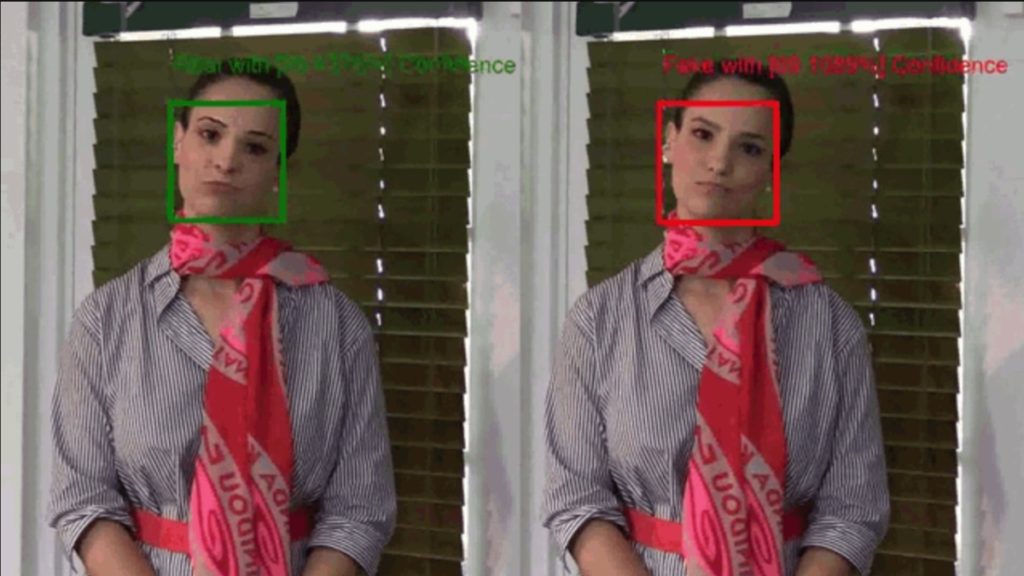

Microsoft Video Authenticator analyzes a given image or video and produces what Microsoft calls a confidence score: a percentage indicating the chance that the media has been artificially manipulated.

The tool can analyze a video and provide a confidence score for each frame in real time. Microsoft Research developed it in collaboration with Microsoft’s Responsible AI team and AETHER committee.

Microsoft said in a blog post that the tool “works by detecting the blending boundary of the deepfake and subtle fading or greyscale elements that might not be detectable by the human eye.”

Microsoft has also announced a tool that integrates with Microsoft Azure and allows content publishers to add digital hashes and certificates to their content. A browser extension (the reader) can then check this data to verify the content’s authenticity. The BBC recently announced that it is implementing this technology through Project Origin.
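To make the hash idea concrete, here is a minimal Python sketch of how a reader could check content against a hash the publisher attached. It is only an illustration and not Microsoft's implementation: the function names `content_hash` and `is_authentic` are hypothetical, SHA-256 is an assumed choice of hash, and the certificate step (which would prove who published the hash) is left out.

```python
import hashlib
import hmac


def content_hash(data: bytes) -> str:
    """Publisher side: compute a SHA-256 fingerprint of the content (assumed hash choice)."""
    return hashlib.sha256(data).hexdigest()


def is_authentic(data: bytes, published_hash: str) -> bool:
    """Reader side: recompute the fingerprint and compare it with the published one."""
    return hmac.compare_digest(content_hash(data), published_hash)


# Hypothetical content standing in for a real video file.
original = b"original video bytes"
published = content_hash(original)

print(is_authentic(original, published))                 # unchanged content
print(is_authentic(original + b" tampered", published))  # altered content
```

Any change to the content, however small, produces a different hash, so the second check fails while the first passes.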

The post Microsoft’s Deepfake Detection Tool Uses ‘Confidence Score’ To Find Fake Content appeared first on Fossbytes.

from Fossbytes https://ift.tt/2DlTod7
